The Semantic Web

It’s hard to believe that just 25 years ago the idea of linking together databases so people could access information easily was limited to research universities and prescient sci-fi writers.

With the advent of the World Wide Web and HTML, databases came to the masses in the form of pages. Pages could contain images, text, video and links to other pages. We no longer needed a librarian to retrieve facts, nor a researcher’s skills to tap into bodies of knowledge.

But as the broader Internet grew, information was liberated, and for many of the businesses and governments that rely on this data, its volume and complexity spiralled out of control. Almost every business became a publisher, and good information was quickly littered with bad.

Authoritative websites might have had excellent data quality, but they struggled to organise their information adequately. All the progress in unleashing useful information morphed into a calamitous expanse of the world’s information dumped together. The reader is still left to make sense of it and find their own way through the chaos.

But what if a search query led directly to the data itself rather than to the page it resided on? With Google’s Knowledge Graph, you can ask for a country’s population and the answer appears. Google, Facebook (with its Open Graph Protocol) and the BBC are three organisations using linked data to have the computer deliver exactly the data users are looking for.

To make the machine “smarter”, though, computers needed a standard way to find and interpret the data on a page through its metadata. That standard is RDF (Resource Description Framework). Just as HTML gave us a way to structure and present information to people, RDF provides structured information to computers.

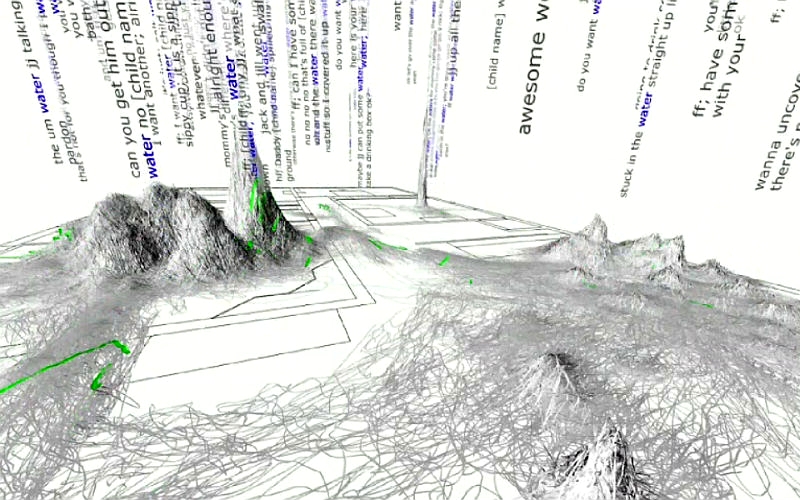

But databases only understand facts. In RDF, a fact is represented as a triple: a subject-predicate-object combination in which the subject and the object are entities (the object can also be a literal value, such as a number or a name) and the predicate describes the relationship between them. Using this simple model, information from any data source (spreadsheets, databases, XML documents, web pages, RSS feeds, e-mail…) can be represented in a uniform way. Because everything is identified by global URIs, any data source can refer to any other. Each triple can easily be combined with other facts and sources to form a web of information that you can assemble and reassemble.
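To make the subject-predicate-object model concrete, here is a minimal sketch in plain Python. It assumes a toy in-memory list rather than a real triple store; the `example.org` URIs and the `match` helper are hypothetical, chosen only to show how two sources that share URIs merge without any schema mapping.

```python
from typing import Iterable, Optional

# Each fact is a (subject, predicate, object) tuple.
Triple = tuple[str, str, str]

# Hypothetical namespace: illustrative URIs, not a real vocabulary.
EX = "http://example.org/"

# Two separate sources, already expressed in the same uniform model.
spreadsheet_source: list[Triple] = [
    (EX + "England", EX + "capital", EX + "London"),
    (EX + "England", EX + "partOf", EX + "UnitedKingdom"),
]
rss_feed_source: list[Triple] = [
    (EX + "London", EX + "locatedIn", EX + "England"),
]


def match(store: Iterable[Triple], s: Optional[str] = None,
          p: Optional[str] = None, o: Optional[str] = None) -> list[Triple]:
    """Return every triple matching the pattern; None acts as a wildcard."""
    return [
        t for t in store
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


# Both sources name things with the same global URIs, so merging them
# is plain concatenation: no schema mapping is needed.
merged = spreadsheet_source + rss_feed_source

print(match(merged, s=EX + "England"))  # facts whose subject is England
print(match(merged, o=EX + "England"))  # facts that point at England
```

A production system would use an RDF library or triple store with indexes rather than a linear scan, but the pattern-with-wildcards idea is the same one that triple-pattern queries build on.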

So if the machine knows that this picture is a map of England, it can link out to all the other “facts” about England, such as its capital city, population, type of government or currency. Voilà. You have immediately created a logical piece of content made of facts that are all related to one another. And THIS is very powerful, very dynamic and very valuable.
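The “link out from the picture” step can be sketched as following one relationship and then collecting everything attached to its target. This is an illustrative toy, assuming hypothetical `example.org` URIs and made-up predicate names such as `depicts`; it is not how any particular product implements it.

```python
# Hypothetical namespace and data; property names are illustrative only.
EX = "http://example.org/"

triples = [
    (EX + "image1", EX + "depicts", EX + "England"),
    (EX + "England", EX + "capital", EX + "London"),
    (EX + "England", EX + "currency", "Pound sterling"),
    (EX + "England", EX + "partOf", EX + "UnitedKingdom"),
]


def facts_about(store, subject):
    """List (predicate, object) pairs for one subject, with the namespace trimmed."""
    return [(p.removeprefix(EX), o) for s, p, o in store if s == subject]


def describe(store, resource):
    """Follow 'depicts' links from a resource and gather facts about each target."""
    targets = [o for s, p, o in store if s == resource and p == EX + "depicts"]
    return {target: facts_about(store, target) for target in targets}


for entity, facts in describe(triples, EX + "image1").items():
    print(entity)
    for predicate, obj in facts:
        print(f"  {predicate}: {obj}")
```

Adding a new fact about England to `triples` makes it appear in the description automatically, which is the “assemble and reassemble” property described above.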

There is a whole world of triples out there: free triple data sets that have been crowd-sourced or created by authoritative bodies. You can combine this data, called public triples or Linked Open Data (LOD), with your own private triples if you have them.

This world of triples and linked data was dubbed the Semantic Web by Tim Berners-Lee about a dozen years ago, and as with many good ideas, the hype was high but the delivery left a lot to be desired. The Semantic Web was going to add context and intelligence to your data, but the leap from research lab to production proved hard. Ironically, semantic linked data on its own lacked organisational context.

Public databases such as the Open Government Data Initiative (OGDI) made a wide body of public data available (some of it in RDF form), but meshing it with internal data was nearly impossible. Internal data lived in either a relational database or a document-centric database, triples needed their own triple store, and each store needed its own index. Putting all the data together still required humans, or a tremendous amount of computing power and data modelling.

In recent years there has finally been a breakthrough: a combination database that allows all types of data (documents, values and triples), along with their respective indices, to reside together. Triples can then be combined with other data types (public and internal, linked or not) in rich applications that assist with risk management, decision support, knowledge management and reference data management.

This combination database allows ontologies (a domain or organisation’s framework of concepts and relationships that includes vocabularies, taxonomies, business rules) and data types to not only co-exist – but to inter-relate.
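As a rough illustration of documents, values and triples inter-relating, the sketch below answers one question that needs all three: which recent documents mention an organisation located in a given country. Everything here is hypothetical (the document IDs, fields and predicate names), and a real combination database would maintain indexes for each kind of data instead of scanning Python lists.

```python
# Hypothetical combined store: documents with ordinary values, plus triples.
# A real database would index each kind of data; this sketch just scans.
documents = {
    "doc/report-1": {"title": "Supplier review", "year": 2013},
    "doc/report-2": {"title": "Risk summary", "year": 2012},
    "doc/report-3": {"title": "Market note", "year": 2014},
}

triples = [
    ("doc/report-1", "mentions", "org/Acme"),
    ("doc/report-2", "mentions", "org/Acme"),
    ("doc/report-3", "mentions", "org/Globex"),
    ("org/Acme", "locatedIn", "country/UK"),
    ("org/Globex", "locatedIn", "country/FR"),
]


def documents_about_orgs_in(country, min_year):
    """Combine a graph condition (via triples) with a value condition (year)."""
    orgs = {s for s, p, o in triples if p == "locatedIn" and o == country}
    mentioning = {s for s, p, o in triples if p == "mentions" and o in orgs}
    return sorted(d for d in mentioning if documents[d]["year"] >= min_year)


print(documents_about_orgs_in("country/UK", min_year=2013))
```

Neither the documents alone nor the triples alone can answer this question; the value filter and the graph hop have to be evaluated together.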

Consider the time spent rekeying information over and over again. A researcher wants to look up all the types of herbicides, then look at a specific chemical within a herbicide, then a molecular compound within that chemical, and finally see where else that compound appears. You could put all this into Excel and start cutting and pasting. Or you could pull from linked open data sets, convert any relational data to triple format, and quickly query for the answer. The first way is error-prone and tedious, and takes many hours if not days. The second is a tremendous time saver, and accurate.
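The herbicide walk-through is essentially a chain of graph hops. Here is a minimal sketch of those steps over a toy triple list; the names `HerbicideA`, `ChemicalX`, `CompoundZ` and the predicates are hypothetical placeholders, not real substances or a real vocabulary. In a real triple store the whole chain would typically be expressed as a single SPARQL query.

```python
# Hypothetical namespace and placeholder names: not real herbicides or chemicals.
EX = "http://example.org/"

triples = [
    (EX + "HerbicideA", EX + "type", EX + "Herbicide"),
    (EX + "HerbicideB", EX + "type", EX + "Herbicide"),
    (EX + "HerbicideA", EX + "containsChemical", EX + "ChemicalX"),
    (EX + "HerbicideB", EX + "containsChemical", EX + "ChemicalY"),
    (EX + "ProductC", EX + "containsChemical", EX + "ChemicalY"),
    (EX + "ChemicalX", EX + "containsCompound", EX + "CompoundZ"),
    (EX + "ChemicalY", EX + "containsCompound", EX + "CompoundZ"),
]


def objects(s, p):
    """Values linked from subject s by predicate p."""
    return [o for s2, p2, o in triples if s2 == s and p2 == p]


def subjects(p, o):
    """Subjects that link to o by predicate p."""
    return [s for s, p2, o2 in triples if p2 == p and o2 == o]


# Step 1: all herbicides.
herbicides = subjects(EX + "type", EX + "Herbicide")
# Steps 2 and 3: a chemical in the first herbicide, and a compound in that chemical.
chemical = objects(herbicides[0], EX + "containsChemical")[0]
compound = objects(chemical, EX + "containsCompound")[0]
# Step 4: every product containing any chemical that contains the compound.
chemicals_with_compound = subjects(EX + "containsCompound", compound)
products = sorted({
    product
    for chem in chemicals_with_compound
    for product in subjects(EX + "containsChemical", chem)
})

print(compound, "appears in", products)
```

Each hop is a lookup rather than a copy-and-paste, so adding a new data set simply means appending more triples.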

Or suppose a group of researchers in a department wants to know everything about drought-resistant crops for a given location. With traditional tools, they type in a search and get back a list of links. They then have to build their own data map of how it all relates, for example by the key compounds they need to research further, and work out how the pieces fit together. With semantics, the researchers can start from existing data maps and links instead.

The topic of drought is related to many other topics in the literature. These relationships form an ontology: an organisation of topics that represents a way of thinking about the domain. So instead of running searches, the researcher can interact with these ontologies to see what content and data is available and how it fits together. The researcher can then make new associations, and even share them, adding to the Semantic Web.
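Browsing an ontology instead of searching can be sketched as walking topic-to-topic links and then collecting content tagged with any topic reached. The topics, documents and the `related` predicate below are hypothetical; `related` loosely mirrors SKOS’s `skos:related` but is treated as one-directional here to keep the sketch short.

```python
from collections import deque

# Hypothetical namespace and topics. "related" loosely mirrors SKOS's
# skos:related, but is treated as one-directional here for simplicity.
EX = "http://example.org/"
RELATED = EX + "related"
ABOUT = EX + "about"

ontology = [
    (EX + "Drought", RELATED, EX + "WaterScarcity"),
    (EX + "Drought", RELATED, EX + "DroughtResistantCrops"),
    (EX + "DroughtResistantCrops", RELATED, EX + "RootDepth"),
]
content = [
    (EX + "doc1", ABOUT, EX + "WaterScarcity"),
    (EX + "doc2", ABOUT, EX + "RootDepth"),
    (EX + "doc3", ABOUT, EX + "Irrigation"),
]


def related_topics(start, max_hops=2):
    """Breadth-first walk of 'related' links, up to max_hops away from start."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        topic, depth = queue.popleft()
        if depth == max_hops:
            continue
        for s, p, o in ontology:
            if s == topic and p == RELATED and o not in seen:
                seen.add(o)
                queue.append((o, depth + 1))
    return seen


def content_for(topic, max_hops=2):
    """Content tagged with the topic or any topic reachable from it."""
    topics = related_topics(topic, max_hops)
    return sorted(doc for doc, p, t in content if p == ABOUT and t in topics)


before = content_for(EX + "Drought")
# A researcher records (and could share) a new association.
ontology.append((EX + "Drought", RELATED, EX + "Irrigation"))
after = content_for(EX + "Drought")
print(before, after)
```

The last three lines show the “make new associations” step: one added triple makes previously unreachable content (`doc3`) surface for anyone exploring the drought topic.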

Not a researcher? How about a developer constantly getting requests for reports drawn from disparate systems? Instead of spending weeks or even months remodelling, what if you used RDF as the data model? With RDBMS-to-RDF tools you can migrate relational data to triples relatively easily, speeding development by orders of magnitude.
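The core of an RDBMS-to-RDF conversion can be sketched with Python’s built-in `sqlite3`: each row becomes a subject, and each non-null column becomes a predicate-object pair. The IRI patterns below loosely follow the idea of the W3C Direct Mapping of relational data to RDF, simplified for illustration; the `customer` table, its data and the base IRI are hypothetical, and real tools handle keys, datatypes and foreign keys far more thoroughly.

```python
import sqlite3

# Hypothetical base IRI. The IRI patterns loosely follow the idea of the
# W3C "Direct Mapping" of relational data to RDF, simplified for illustration.
BASE = "http://example.org/db/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def table_to_triples(conn, table, pk):
    """Turn each row into triples: one type triple plus one per non-null column."""
    # Table and column names are interpolated directly: trusted input only.
    cur = conn.execute(f"SELECT * FROM {table} ORDER BY {pk}")
    columns = [d[0] for d in cur.description]
    triples = []
    for row in cur:
        record = dict(zip(columns, row))
        subject = f"{BASE}{table}/{pk}={record[pk]}"
        triples.append((subject, RDF_TYPE, f"{BASE}{table}"))
        for col, value in record.items():
            if col != pk and value is not None:
                triples.append((subject, f"{BASE}{table}#{col}", value))
    return triples


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customer VALUES (?, ?, ?)",
    [(1, "Acme Ltd", "Leeds"), (2, "Globex", None)],
)

for triple in table_to_triples(conn, "customer", "id"):
    print(triple)
```

Null columns are skipped rather than emitted, since RDF has no null: a missing fact is simply an absent triple.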

If you have tremendous volumes of information that need updating as new information flows in, semantic dynamic publishing can be a powerful solution. For its Olympics coverage, the BBC had a dozen editors to develop, manage and update the pages for 10,000 athletes, along with their countries and sports. Instead of having people cull through the assets to lay out the pages, assets were added dynamically as certain conditions were met. The result? Seamless dynamic delivery with no additional headcount.
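The “assets added as certain conditions were met” idea can be sketched as a rule evaluated over triples: a page is published only once the facts it needs exist, and related stories attach themselves automatically. This is a hypothetical toy, not the BBC’s actual system; the athletes, stories, predicates and the rule itself are assumptions for illustration.

```python
# Hypothetical namespace and data; this is not the BBC's actual system.
EX = "http://example.org/"

triples = [
    (EX + "athlete/1", EX + "competesIn", EX + "sport/Rowing"),
    (EX + "athlete/1", EX + "represents", EX + "country/GB"),
    (EX + "athlete/2", EX + "competesIn", EX + "sport/Judo"),  # country not yet known
    (EX + "story/10", EX + "mentions", EX + "athlete/1"),
    (EX + "story/11", EX + "mentions", EX + "athlete/2"),
]


def first_object(store, s, p):
    """First object for (s, p), or None if there is no such triple."""
    return next((o for s2, p2, o in store if s2 == s and p2 == p), None)


def build_pages(store):
    """Publish a page for each athlete whose required facts are present."""
    pages = {}
    athletes = sorted({s for s, p, o in store if p == EX + "competesIn"})
    for athlete in athletes:
        sport = first_object(store, athlete, EX + "competesIn")
        country = first_object(store, athlete, EX + "represents")
        if country is None:
            continue  # condition not met yet; the page appears once data arrives
        stories = sorted(s for s, p, o in store if p == EX + "mentions" and o == athlete)
        pages[athlete] = {"sport": sport, "country": country, "stories": stories}
    return pages


before = build_pages(triples)
triples.append((EX + "athlete/2", EX + "represents", EX + "country/JP"))  # new data flows in
after = build_pages(triples)
print(sorted(before), sorted(after))
```

No editor lays out the second athlete’s page: adding the missing fact is enough for the rule to publish it, together with the story that already mentioned that athlete.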

With the advent of technology that allows triples to be stored alongside other types of assets, industry applications are quickly evolving, including:

- Financial services companies: exploring the impact of semantic triples on maintaining reference data for compliance and governance

- Intelligence agencies: looking to map social networks with intelligence reports

- Media companies: using semantic web technologies to dynamically publish sites

- Healthcare companies: using semantics to simplify analytics around electronic patient records, prescription drugs and insurance data

With triples acting as the glue between documents and values, this webby way of linking things puts the most contextually relevant facts at users’ fingertips, the way we envisioned it. The technology has finally caught up with the aspirations.
